Serializable Hyper Parameter (Technical)

SerializableHyperParameter is an annotation that marks the fields to be read and written when Activation, Cost Function and Gradient Descender implementations are serialized. If you write a custom implementation of any of the three and want it to support serialization, annotate the relevant fields with SerializableHyperParameter.
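To illustrate the general mechanism, here is a minimal sketch of how a field-level annotation like this could be declared and discovered through reflection. It is not the library's actual implementation: the attribute definition below is a stand-in, and `HyperParameterReader`, `Collect` and `ExampleDescender` are hypothetical names introduced only for this example.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Stand-in for the library's attribute; the real definition may differ.
[AttributeUsage(AttributeTargets.Field)]
public sealed class SerializableHyperParameterAttribute : Attribute
{
    public string Name { get; }

    public SerializableHyperParameterAttribute(string name) => Name = name;
}

// Hypothetical helper: gathers the values of all annotated instance fields.
public static class HyperParameterReader
{
    public static Dictionary<string, object> Collect(object target)
    {
        var values = new Dictionary<string, object>();
        const BindingFlags flags =
            BindingFlags.Instance | BindingFlags.Public | BindingFlags.NonPublic;

        foreach (FieldInfo field in target.GetType().GetFields(flags))
        {
            // Fields without the attribute are ignored.
            var attribute = field.GetCustomAttribute<SerializableHyperParameterAttribute>();
            if (attribute != null)
            {
                values[attribute.Name] = field.GetValue(target);
            }
        }

        return values;
    }
}

// Hypothetical type with one annotated and one unannotated private field.
public class ExampleDescender
{
    [SerializableHyperParameter("learning rate")]
    private readonly double _learningRate = 0.05;

    private int _iterations = 0; // not annotated, so it is skipped
}
```

Calling `HyperParameterReader.Collect(new ExampleDescender())` in this sketch returns a single entry keyed by "learning rate". Note that `GetFields` on the runtime type does not return private fields declared in base classes, so a real serializer would likely need to walk the inheritance chain as well.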

Currently, SerializableHyperParameter only supports some mathematical types: namely,

Example: Implementing RProp Gradient Descent

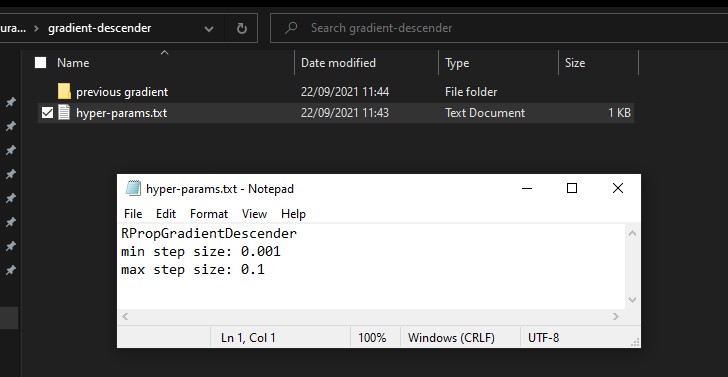

The RProp gradient descent algorithm uses three hyper-parameters:

- the minimum step size (

double _minStepSize) - the maximum step size (

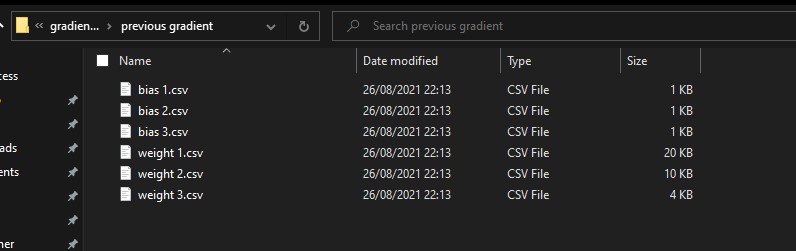

double _maxStepSize) - the previous cost gradient (

Parameter _prevGradient)
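To show why all three values must survive a save and reload, here is a rough sketch of the usual RProp step-size rule for a single weight. It is an illustration under assumptions, not the library's code: `RPropStep` and `NextStepSize` are hypothetical names, plain `double` values stand in for the library's `Parameter` type, and the growth and shrink factors (1.2 and 0.5) are the commonly used defaults rather than values taken from this project.

```csharp
using System;

// Hypothetical helper, not part of the library.
public static class RPropStep
{
    // Commonly used RProp factors (assumed here).
    private const double Increase = 1.2;
    private const double Decrease = 0.5;

    // Returns the next step size for one weight, clamped to [minStepSize, maxStepSize].
    public static double NextStepSize(
        double stepSize,
        double gradient,
        double prevGradient,
        double minStepSize,
        double maxStepSize)
    {
        double product = gradient * prevGradient;

        if (product > 0)
        {
            // Same sign as the previous gradient: grow the step, up to the maximum.
            return Math.Min(stepSize * Increase, maxStepSize);
        }

        if (product < 0)
        {
            // Sign flipped, so a minimum was overshot: shrink the step, down to the minimum.
            return Math.Max(stepSize * Decrease, minStepSize);
        }

        // One of the gradients is zero: keep the step unchanged.
        return stepSize;
    }
}
```

Because each update compares the current gradient with the previous one, a descender restored without its previous gradient could not resume training from where it stopped.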

Let's make these hyper-parameters serializable:

    public class RPropGradientDescender : GradientDescender
    {
        [SerializableHyperParameter("min step size")]
        private readonly double _minStepSize = 0.001;

        [SerializableHyperParameter("max step size")]
        private readonly double _maxStepSize = 0.1;

        [SerializableHyperParameter("previous gradient")]
        private Parameter _prevGradient = null;

        ...
    }

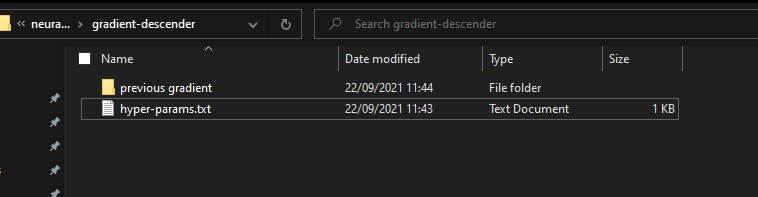

Assume we implement the rest of RPropGradientDescender according to the steps in GradientDescender. If we use RPropGradientDescender in a NeuralNet and write the NeuralNet to a directory, the following output is produced in the "gradient descender" folder: